We have said in a previous post that the LSRL represents the best possible fitting line for the data association. But an LSRL, while best possible, might still not be very good. How do we tell?

Continuing with the Smoking Rate versus Lung Cancer Rate example that we have been discussing, we found that the correlation was 0.94 (excellent) and the LSRL equation was:

LungCancerRate-hat = 22.042 + 3.0566*(SmokingRate)

To judge the quality of our LSRL, we need to check two things: the residuals and R-squared. Let's take them one at a time.

Residuals

A residual represents, for each x-value, how different the actual y-value is from the predicted value, y-hat. In other words, a residual is the distance between an actual y-value for a given x and the predicted y-value from the regression equation. In the graph below, I have identified two residuals, in orange. There is one residual for each x-value in the dataset; I just chose these two to keep it simple. Math-wise, a residual equals the actual y-value minus y-hat, the predicted y-value from the LSRL equation.

We find the residuals by plugging each x-value (smoking rates in our case) into the regression equation. That gives us a y-hat for each x. Then we subtract the y-hat value from the original y-value to get the residual. Here is a table of the residual calculations.
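Here is a minimal sketch of that calculation in Python. The coefficients come from the LSRL equation in these posts (y-hat = 22.042 + 3.0566x), and the only data point used is the 1999 point (23.3, 93.5) mentioned in the scatterplot post. The function names `predict` and `residual` are my own choices for illustration.

```python
# Coefficients taken from the LSRL equation used in these posts.
INTERCEPT = 22.042
SLOPE = 3.0566


def predict(smoking_rate):
    """Return y-hat, the predicted lung cancer rate per 100,000."""
    return INTERCEPT + SLOPE * smoking_rate


def residual(smoking_rate, lung_cancer_rate):
    """Residual = actual y-value minus predicted y-value (y-hat)."""
    return lung_cancer_rate - predict(smoking_rate)


# The 1999 point from the posts: smoking rate 23.3, lung cancer rate 93.5.
res_1999 = residual(23.3, 93.5)
print(round(predict(23.3), 3), round(res_1999, 3))
```

For 1999 the residual comes out to roughly 0.24, meaning the actual lung cancer rate sat slightly above the line. Repeating this for every year gives the full column of residuals.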

Now that we have our residuals, we graph them against the original x-values in a scatterplot. If a regression is good quality, this scatterplot will have no pattern and look completely random. Here is our residual plot:

While there aren't a lot of points in this plot, we can say that there is no discernible pattern, so we can proceed to our other criterion: R-squared.

R-Squared

When we square r, our correlation, we get a statistic called the Coefficient of Determination, or R-Squared. Ours is 0.94*0.94 = 0.8836. We read R-squared as a percent: about 88.4%.

In reality, variables other than the smoking rate contribute to the lung cancer rate: heredity, exposure to pollution, and the like. R-squared tells us what percent of the variation in the response variable is accounted for by its linear relationship with the explanatory variable. We say that smoking rate accounts for 88.4% of the variation in lung cancer rate in the linear relationship. That means that other variables, known or unknown, account for the remaining 11.6%. Not bad at all.
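The arithmetic is short enough to check in a few lines of Python. This is just a sketch of the calculation described above; the variable names are my own.

```python
r = 0.94                       # correlation reported in the post
r_squared = r ** 2             # coefficient of determination

# Express both shares of the variation as percents, to one decimal place.
explained_pct = round(100 * r_squared, 1)
unexplained_pct = round(100 * (1 - r_squared), 1)

print(explained_pct, unexplained_pct)
```

The two percents always add to 100, so the share left to other variables here works out to about 11.6%.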

Because our residuals plot looks random and our R-squared value is high, we can say our regression is of high quality and feel confident using the LSRL equation to predict lung cancer rates from smoking rates, within the boundaries of our data. That means all is well if we're dealing with smoking rates between 19.7 and 23.3 people per 100,000.

What happens if we get a pattern in our residuals plot or a low R-Squared value? First, let me point out that you can get one without the other, or you can get both. In either case, your data isn't as linear as it appears and it might not be appropriate to use linear regression unless you can restate your data in a way that makes it more linear. That will be the subject of a very-near future post.

Statistics Without Tears

What is Statistics? Why is it one of the most misunderstood, misused, and underappreciated mathematics fields? Read on to learn basic statistics and see it in action!

Saturday, October 22, 2011

Saturday, October 15, 2011

Correlation and Linear Regression

When two quantitative variables have a linear relationship, we classify the association as strong, moderate, or weak. However, we can also quantify the strength and direction of a linear association between two quantitative variables. To do this, we find the correlation.

We'll continue with our example from the previous post: smoking rates versus lung cancer rates from 1999 through 2007. Below are the data:

We made a scatterplot and established that the path looked linear and very strongly positive:

There is, of course, a formula for finding the correlation between two variables. However, thanks to graphing calculators and other tools like MS-Excel, we can find the correlation much more easily. First, a bit more about correlation...

-- Correlation is a number between -1 and +1. It is called "r."

-- The sign of the correlation indicates whether the association is positive (upward from left to right) or negative (downward from left to right).

-- A correlation of -1 or +1 indicates a perfect linear association; that is, the scatterplot points all lie on a straight line.

-- A correlation of 0 means that there is no linear association between the variables. (They could still be related in some other way, such as along a curve.)

-- Correlation has no units associated with it -- it's simply a number.

-- The order of the variables doesn't matter; their correlation is the same either way.

-- If you add the same number to each of the data values in one or both sets, or multiply each value by the same positive number, the value of the correlation is unchanged. (Multiplying by a negative number flips its sign.)
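Several of these properties can be checked numerically. The sketch below computes r straight from its textbook definition and then tries a few changes to the data. The numbers in `xs` and `ys` are made up purely for illustration; they are NOT the CDC figures from this post, and `pearson_r` is my own helper name.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation computed from its definition."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical illustration data, not the data from the post.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

r = pearson_r(xs, ys)
r_swapped = pearson_r(ys, xs)                      # swap the variables
r_shifted = pearson_r([x + 10 for x in xs], ys)    # add a constant
r_scaled = pearson_r([x * 2.54 for x in xs], ys)   # multiply by a positive constant
r_negated = pearson_r([-x for x in xs], ys)        # multiply by a negative constant
r_curved = pearson_r([x ** 2 for x in xs], ys)     # a nonlinear change

print(r, r_swapped, r_shifted, r_scaled, r_negated, r_curved)
```

Swapping, shifting, and positive rescaling leave r alone; negating one variable flips the sign; squaring the x-values changes r, because that is not a simple shift or rescale.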

Below is the MS-Excel way to find correlation. See the highlighted line.

0.94 (rounded) is nearly perfect.

Linear Regression

When an association is linear, we can find the equation of a line that best fits the numbers in the data sets. While there are many lines that look like they fit the data points well, there is only one "best" fitting line. This line is called the least squares regression line, or LSRL.

Like any line, the LSRL is in the form of y = a + bx, where "a" is the y-intercept and "b" is the slope. This line won't run through each point in the scatterplot, but it will be as close as possible to doing so. In fact, if we were to measure the vertical distance between each of our points and the point on the regression line that has the same x-coordinate, then square those distances and add them up, the sum would be smaller than for any other line. That's what we mean by "best fit," and it is what is meant by "least squares."
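To make the "least squares" idea concrete, here is a small Python sketch. It uses the standard closed-form formulas for the slope and intercept, which this post does not derive, and then compares the sum of squared vertical distances for the fitted line against a few nudged lines. The data are invented for illustration only, and the function names are my own.

```python
def fit_lsrl(xs, ys):
    """Return (a, b) for the least squares line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx                 # slope
    a = mean_y - b * mean_x       # intercept: the line passes through the means
    return a, b


def sum_squared_residuals(a, b, xs, ys):
    """Add up the squared vertical distances from each point to the line."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical illustration data, not the data from the post.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

a, b = fit_lsrl(xs, ys)
best = sum_squared_residuals(a, b, xs, ys)

# Nudge the intercept and slope; every nudged line should do worse.
nudged = [
    sum_squared_residuals(a + da, b + db, xs, ys)
    for da in (-0.5, 0.5)
    for db in (-0.2, 0.2)
]
print(best, nudged)
```

Whichever way the line is nudged, the sum of squares goes up, which is exactly the sense in which the LSRL is "best."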

Rather than get hung up on the "least squares" idea right now, let's remember to come back to it later. Right now, let's find the LSRL equation for our smoking/lung cancer data. First, assuming your scatterplot is in MS-Excel, right-click on any point in your graph and select "Add Trendline" from the drop-down menu.

You will see the following sub-menu. Be sure you select "Linear" and "Display equation":

The LSRL equation, in y = a + bx format and with x and y replaced by their meanings, is:

LungCancerRate-hat = 22.042 + 3.0566*(SmokingRate)

The "hat" on the response variable, LungCancerRate (normally drawn as an inverted "V" over the variable name), means that the value is a prediction. That is, the regression line equation is a way of predicting a lung cancer rate from a given smoking rate. In fact, this is exactly why we're interested in regression equations -- to help us predict new values based on this best fitting line equation. But predicting comes with cautions. Because the data that produced this equation come from 1999 to 2007 and cover only a limited range of smoking rates, it is not prudent, nor is it reliable, to predict for smoking rates outside that range or for years outside that period -- only within them. We cannot predict the future based on the past!

When you close the above window, your trendline and equation will appear:

(Self-Test): Suppose that in your state, the smoking rate during one of these years was 23.75 per 100,000 people. What would you predict the lung cancer rate to be?

(Answer): You would plug 23.75, your x-value, into the LSRL equation and solve for the y-value...

y-hat = 22.042 + 3.0566*(23.75)

= 22.042 + 72.594

= 94.636

The lung cancer rate is predicted to be 94.636 per 100,000 people.
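The same plug-in step can be sketched in Python. The coefficients are the ones used in the answer above. The range check uses the smoking rates of 19.7 to 23.3 that the follow-up post on LSRL quality gives as the boundaries of the data; the function names are my own.

```python
INTERCEPT = 22.042
SLOPE = 3.0566

# Observed smoking-rate range, as stated in the post on judging LSRL quality.
X_MIN, X_MAX = 19.7, 23.3


def predict_lung_cancer_rate(smoking_rate):
    """Plug a smoking rate into the LSRL equation and return y-hat."""
    return INTERCEPT + SLOPE * smoking_rate


def is_within_data(smoking_rate):
    """True when the x-value lies inside the range the line was fitted on."""
    return X_MIN <= smoking_rate <= X_MAX


prediction = predict_lung_cancer_rate(23.75)
inside = is_within_data(23.75)
print(round(prediction, 3), inside)
```

Going by that stated range, 23.75 sits slightly above the largest smoking rate in the data, so this particular prediction deserves a little extra caution.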

Interpreting the Y-intercept and Slope

It is often useful to interpret the slope and y-intercept in the context of the situation. For instance, the y-intercept is the value produced when x is zero. In our context, x stands for the smoking rate. So, we can say that when the smoking rate is zero -- that is, when no one in the population smokes -- the predicted lung cancer incidence is 22.042 per 100,000. Keep in mind that a smoking rate of zero is far outside our data, so this is a reading of the equation rather than a reliable prediction.

Now for the slope. Remember that the slope is a ratio capturing the change in the y-variable versus the change in x. In our example, that would be the change in lung cancer rates as smoking rates change. The slope in our LSRL equation is 3.0566, which we can think of as 3.0566 / 1. The change in lung cancer rates is the numerator; the change in smoking rate is the denominator. Interpreting this in the context of our situation, we can say that for each additional 1 per 100,000 in the smoking rate, the predicted lung cancer rate increases by 3.0566 per 100,000, based on the data from those years.

Sunday, September 18, 2011

Associations Between Two Variables

Up till now, we've been analyzing one set of quantitative data values, discussing center, shape, spread, and their measures. Sometimes two different sets of quantitative data values have something to do with each other, or so we suspect. This post (and a few of those that follow) deals with how to analyze two sets of data that appear to be related to each other.

We all know now that smoking causes lung cancer. This fact took years and lots of statistical work to establish. The first step was to see if there was a connection between smoking and lung cancer. This was done by charting how many people smoked and how many people got lung cancer. This is where we'll start, only we'll do it with more current data for purposes of illustration. Below is a table showing the incidence rates of smoking and lung cancer per 100,000 people, as tracked by the Centers for Disease Control and Prevention, from 1999 - 2007.

Because smoking explains lung cancer incidence and not the other way around, we call the smoking rate variable the explanatory variable. The lung cancer rate variable is called the response variable.

Looking over this table, we can see that smoking rates -- and lung cancer rates -- have both decreased during these 9 years. To illustrate how we deal with two variables at a time, let's continue with this data. The first thing to do is to plot the data on an xy-grid, one point per year. For 1999, for example, we plot the point (23.3, 93.5). Continuing with all the points, we get a scatterplot:

Note that the explanatory variable is on the x-axis, while the response variable is on the y-axis.

When you view a scatterplot, you are looking for a straight-line, or linear, trend. What I do is sketch the narrowest possible oval around the dots. The narrower the oval, the stronger the linear relationship between the variables. As you can see below, the relationship between smoking and lung cancer is quite strong because the oval is skinny:

If our oval had looked more like a circle, we would conclude that there is no relationship. A fatter oval that has a discernible upward or downward direction would indicate a weak association.

This upward-reaching oval also tells us that the association is positive; that is, that as one variable increases, so does the other one. A downward-reaching oval would indicate a negative association, which means that as one variable increases, the other decreases.

When describing an association between two quantitative variables, we address form, strength, and direction. The form is linear, strength is strong, and direction is positive. So we would say, "The association between smoking and lung cancer between 1999 and 2007 is strong, positive, and linear."

Just so you know, there are other forms of association between two quantitative variables: quadratic (U-shaped), exponential, logarithmic, etc. But we limit ourselves for now to linear associations.

Another *really* important thing to remember is that just because there is a linear association between two quantitative variables, it doesn't necessarily mean that one variable causes the other. We know that in the case of smoking and lung cancer, there is a cause-effect relationship, but this was established by many further, carefully designed studies. Seeing the linear association was only the catalyst for further study...it wasn't the culmination!

In the next post, we'll continue with this same example to develop some of the finer points of analyzing associations between two quantitative variables. For now, here is a good term to know: Another way to say the end of that previous sentence is "...analyzing associations for bivariate data." Bivariate simply means "two variables."

Monday, September 5, 2011

The Standard Normal Model: Standardizing Scores

For the purposes of this post, we will refer to the data values in a data set as "scores."

In the last post, we used an example N(14, 2) to illustrate the 68-95-99.7 Rule, which stands for the various percents of scores lying within 1, 2, and 3 standard deviations from the mean. We can generalize the diagram we used so that it represents N(0, 1), where 0 is the mean and 1 is the standard deviation. This makes the model easier to apply, because the units we're most accustomed to seeing -- -1, 0, 1, 2, and so on -- appear as standard deviation units. Take a look:

We call N(0,1) the Standard Normal model. We now can use the number line to locate points that are any number of standard deviations from the mean...even fractional numbers.

In any Normal model, we're going to want to see how many standard deviations a particular score in the data set is from its mean. We can do this for any score, and it has to do with converting a "raw" score (a score from our data) to a "standardized" score (a score from the Standard Normal model). How do we do this? There's a formula, and it's really an easy one:

z = (X - µ) / σ

...where

X stands for the score you're trying to convert,

µ stands for the mean, and

σ stands for the standard deviation.

Suppose we're back in the Normal model N(14, 2) and we want to see how many standard deviations a score of 15 is from the mean. We would subtract our mean from 15, then divide by the standard deviation, 2. That is: (15 - 14) / 2 = 0.5. This means that our score of 15 is 0.5 standard deviations above the mean. 0.5 is the score on the Standard Normal model that represents our score from N(14, 2). We call 0.5 our standardized score, also known as a z-score. Z-scores tell us how many standard deviations a given "raw" score is from the mean.

(Self-Test): In the Normal model N(50, 4), standardize a score of 55.

(Answer): Find the z-score using the formula:

z = (55 - 50) / 4 = 5 / 4 = 1.25. The score is 1.25 standard deviations above the mean.

(Self-Test): In the Normal model N(50, 4), find the z-score for 42.

(Answer): z-scores can be negative, too. In this problem, 42 is less than the mean. So it will lie to the left of 50, and its z-score will be negative. z = (42 - 50) / 4 = -8 / 4 = -2. The score is 2 standard deviations below the mean.
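The standardizing step is a one-line function in Python. This sketch simply replays the three examples worked above; the name `z_score` is my own.

```python
def z_score(raw, mean, sd):
    """How many standard deviations the raw score lies from the mean."""
    return (raw - mean) / sd


z_example = z_score(15, 14, 2)    # the N(14, 2) example
z_first = z_score(55, 50, 4)      # first self-test, N(50, 4)
z_second = z_score(42, 50, 4)     # second self-test, N(50, 4)

print(z_example, z_first, z_second)
```

A positive result means the score is above the mean, and a negative result means it is below.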

Why would we want to standardize our scores? There are actually two reasons.

1. It can help us see how unusual a score might be.

How? Well, the percents we have been talking about can also be thought of as probabilities. For example, the probability that a score is greater than the mean is 50%, the same as the probability that a score is less than the mean. In the last post, we marked off regions and computed percents. In statistics, we consider any z-score of 3 or more, or -3 or less, as unusual, because (as we saw in the last post) only 0.15% of scores are in each of those regions. In other words, the probability of seeing a score in one of these regions is at most 0.15%, less than even a quarter-percent. That's unusual.

2. It can allow us to compare apples to oranges.

How? Well, suppose you have just gotten back 2 tests you took: one in algebra and one in earth science. Suppose further that each test's scores follow a different Normal model. The algebra test's scores follow N(80, 5) and the earth science test's scores follow N(85, 8). Now imagine that you got a 90 on the algebra test and a 93 on the earth science test. You can easily see that percentage-wise, your score on the earth science test is higher than your algebra score. But relative to the distributions, on which test did you perform better?

To find out, figure out the z-score for each test score. For your algebra test, your z-score is (90 - 80) / 5 = 10 / 5 = 2 (2 standard deviations above the mean). For your earth science test, your z-score is (93 - 85) / 8 = 8 / 8 = 1 (1 standard deviation above the mean). Relatively speaking, your performance was better on the algebra test; that is, your score was more exceptional. Think of the probabilities. The probability of a score that's 2 standard deviations or more above the mean is 2.5%, whereas the probability of a score that's 1 standard deviation or more above the mean is 16%. Get the idea?

(Self-Test): Suppose Tom's algebra test score was 86 and his earth science test score was 86. On which test did Tom perform better, given that the test scores follow the Normal models we used above?

(Answer): Tom's z-score on the algebra test was z = (86 - 80) / 5 = 6/5 = 1.20. His z-score on the earth science test was z = (86 - 85) / 8 = 1/8 = 0.125. Because Tom's z-score on his algebra test (1.20) is higher than his earth science test z-score (0.125), his algebra performance was better than his earth science performance.
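The apples-to-oranges comparison can be sketched in Python by standardizing each score against its own model and picking the largest z-score. The models N(80, 5) and N(85, 8) come from the example above; the dictionary layout and function names are my own choices.

```python
def z_score(raw, mean, sd):
    """How many standard deviations the raw score lies from the mean."""
    return (raw - mean) / sd


# (mean, standard deviation) for each test, from the example above.
MODELS = {"algebra": (80, 5), "earth science": (85, 8)}


def standardize_all(scores):
    """Convert each raw score to a z-score using that test's own model."""
    return {subject: z_score(raw, *MODELS[subject]) for subject, raw in scores.items()}


def best_subject(scores):
    """Return the subject with the highest z-score."""
    z = standardize_all(scores)
    return max(z, key=z.get)


yours = standardize_all({"algebra": 90, "earth science": 93})
toms = standardize_all({"algebra": 86, "earth science": 86})

print(yours, best_subject({"algebra": 90, "earth science": 93}))
print(toms, best_subject({"algebra": 86, "earth science": 86}))
```

In both cases algebra comes out ahead, even though the raw earth science score is as high or higher.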

One more thing...let's use the z-score formula to go backwards: to convert a standardized score back to a raw score. Suppose Nancy earned a score on the algebra test that was 1.6 standard deviations below the mean. (In other words, her z-score was -1.6.) What actual percentage score would that represent for her, assuming N(80, 5)? In this case, we would start with the z-score formula and fill in what we know. Then, using algebra (!) we would solve for the "raw" score...

-1.6 = (X - 80) / 5

-8 = X - 80

X = 72

Nancy's algebra test score was 72.
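Solving the z-score formula for the raw score gives raw = mean + z * (standard deviation). Here is a short Python sketch of that rearrangement, using Nancy's numbers; the function names are my own.

```python
def z_score(raw, mean, sd):
    """Standardize: how many standard deviations raw lies from the mean."""
    return (raw - mean) / sd


def raw_from_z(z, mean, sd):
    """Undo the standardizing step: raw = mean + z * sd."""
    return mean + z * sd


nancy_raw = raw_from_z(-1.6, 80, 5)        # 1.6 standard deviations below the mean
round_trip = z_score(nancy_raw, 80, 5)     # should bring us back to about -1.6

print(nancy_raw, round_trip)
```

Running the raw score back through `z_score` is a handy way to check the algebra.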

In the next post, we'll expand on the probability side of the Standard Normal model.

Wednesday, August 31, 2011

More About the Normal Distribution: the 68-95-99.7 Rule

In the last post, we covered the Normal distribution as it relates to the standard deviation. We said that the Normal distribution is really a family of unimodal, symmetric distributions that differ only by their means and standard deviations. Now is a good time to introduce a new term: Parameter. When dealing with perfect-world models like the Normal model, their major measures -- in this case, their mean and standard deviation -- are called parameters. The mean is denoted by the Greek letter mu (pronounced "mew"), µ, and the standard deviation is denoted by the Greek letter sigma, σ. We can refer to a particular Normal model by identifying µ and σ and using the letter N, for "Normal": N(µ, σ). For example, if I want to describe a Normal model whose mean is 14 and whose standard deviation is 2, I use the notation N(14, 2).

Every Normal model has some pretty interesting properties, which we will now cover. Take the above model N(14,2). We'll draw it on a number line centered at 14, with units of 2 marked off in either direction. Each unit of 2 is one standard deviation in length. It would look like this...

Now let's mark off the area that's between 12 and 16; that is, the area that's within one standard deviation of the mean. In a Normal model, this region will contain 68% of the data values:

If you've ever heard of "grading on a curve," it's based on Normal models. Scores within one standard deviation of the mean would generally be considered in the "C" grade range.

Now, if you consider the region that lies within two standard deviations from the mean; that is, between 10 and 18 in this model, this area would encompass 95% of all the data values in the data set:

From a grading curve standpoint, that 95% of the values would be Bs, Cs, or Ds. Finally, if you mark off the area within 3 standard deviations from the mean, this region will contain about 99.7% of the values in the data set. The extremities beyond 2 standard deviations would be the As and Fs in our grading curve interpretation.

In statistics, this percentage breakdown is called the "Empirical Rule," or the "68-95-99.7 Rule."
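The 68, 95, and 99.7 figures are rounded. For the curious, the more precise values can be computed with the error function in Python's standard `math` module, using the standard fact that the fraction of a Normal model within k standard deviations of the mean is erf(k / sqrt(2)). This is a side check, not something the rule requires you to do; the function name is my own.

```python
import math


def fraction_within(k):
    """Fraction of a Normal model lying within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3):
    print(k, round(100 * fraction_within(k), 2))
```

The results land very close to 68%, 95%, and 99.7%, which is why the rounded rule is so convenient to remember.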

What about the regions below 8 and beyond 20? These areas account for the remaining 0.3% of the data values. That would make 0.15% on each side.

(Self-Test): What percent of data values lie between 12 and 14 in N(14,2) above?

(Answer): If 68% represents the full area between 12 and 16 and given that the Normal model is perfectly symmetric, there must be half of 68%, or 34% between 12 and 14. Likewise, there would be 34% between 14 and 16.

(Self-Test): What percent of data values lie between 16 and 18 in N(14,2) above?

(Answer): Subtract: 95% minus 68% = 27%. This represents the percent of data values between 10 and 12 and between 16 and 18 combined. Divide by 2 and you get 13.5% in each of these regions.

If you do similar operations, the various areas break down like the following:
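The breakdown can be recomputed from the three rule percentages alone, using the symmetry of the Normal model. Here is a small Python sketch; the variable names are my own, and the percents are the rounded 68-95-99.7 values.

```python
# Percent of values within 1, 2, and 3 standard deviations (the rounded rule).
WITHIN = {1: 68.0, 2: 95.0, 3: 99.7}

# Each region appears once on each side of the mean, so halve every difference.
between_0_and_1 = round(WITHIN[1] / 2, 2)                  # mean to 1 SD
between_1_and_2 = round((WITHIN[2] - WITHIN[1]) / 2, 2)    # 1 SD to 2 SD
between_2_and_3 = round((WITHIN[3] - WITHIN[2]) / 2, 2)    # 2 SD to 3 SD
beyond_3 = round((100.0 - WITHIN[3]) / 2, 2)               # past 3 SD

one_side = [between_0_and_1, between_1_and_2, between_2_and_3, beyond_3]
total = 2 * sum(one_side)

print(one_side, round(total, 2))
```

Reading outward from the mean, each side holds 34%, 13.5%, 2.35%, and 0.15%, and the two sides together account for all 100%.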

(Self-Test): In any Normal model, what percent of data values are more than 2 standard deviations above the mean?

(Answer): Using the above model, the question is asking for the percent of data values that are more than 18. You would add the 2.35% and the 0.15% to get the answer: 2.50%.

This is all well and good if you're looking at areas that involve a whole number of standard deviations, but what about all the in-between numbers? For example, what if you wanted to know what percent of data values are within 1.5 standard deviations of the mean? This will be the subject of a future post.


Labels: mean, Normal distribution, Normal model, parameter, standard deviation, µ, σ

Saturday, August 27, 2011

More About Standard Deviation: The Normal Distribution

I haven't said a lot about Standard Deviation up to now: not much more than the fact that it's a measure of spread for a symmetric dataset. However, we can also think of the standard deviation as a unit of measure of relative distance from the mean in a unimodal, symmetric distribution.

Suppose you had a perfectly symmetric, unimodal distribution. It would look like the well-known bell curve. Of course, in the real world, nothing is perfect. But in statistics, we talk about ideal distributions, known as "models." Real-life datasets can only approximate the ideal model...but we can apply many of the traits of models to them.

So let's talk about a perfectly symmetric, bell-shaped distribution for a bit. We call this model a Normal distribution, or Normal model. Because we're dealing with perfection, the mean and median are at the same point. In fact, there are an infinite number of Normal distributions with a particular mean. They only differ in width. Below are some examples of Normal models.

Notice that their widths differ. Another word for "width" is "spread"...which brings us back to Standard Deviation! Take a look at the curves above. In the center section, the shape looks like an upside down bowl, whereas the outer "legs" look like part of a right-side-up bowl. Now imagine the point at which the right-side-up parts meet the upside-down part. Look below for the two blue dots in the diagram. (P.S. They are called "points of inflection," in case you were wondering.)

As shown above, if a line is drawn down the center, the distance from that line to a blue point is the length of one standard deviation. Can you see which lengths of standard deviations in the earlier examples are larger? Smaller?

So, there are two measures that define how a particular Normal model will look: the mean and the standard deviation.

I'd be remiss if I didn't tell you that there is a formula for numerically finding the standard deviation. Luckily, there's a lot of technology out there that automatically computes this for you. (I showed you how to do this in MS-Excel in an earlier post.)

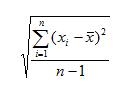

Suppose you have a list of "n" data values, and when you look at a histogram of these values, you see that the distribution is unimodal and roughly symmetric. Call the values x1, x2, x3, and so on. We compute the mean (average, remember?) and write it as x with a bar over it. Then the formula is:

$$\text{standard deviation} = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n - 1}}$$


What does the ∑ mean? Let's take the formula apart. First, you are finding the difference between each data value and the mean of the whole dataset. You're squaring it to make sure you're dealing only with non-negative values. The ∑ means you should add up all those squared differences, one for each value in your dataset. Once you have the sum, you divide by (n-1), which gives you an average of all the squared differences from the mean. This measure (before taking the square root) is called the variance. When you take the square root, you have the value of the standard deviation. So, you see, the standard deviation is the square root of the average squared difference from the mean.
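To see those steps in action, here is a small Python sketch that follows the description one step at a time. The five data values are made up for illustration, and the comparison against the standard library's `statistics.stdev` is only a sanity check, not something from the original post.

```python
import math
from statistics import stdev

data = [4, 8, 6, 5, 7]  # made-up values, purely for illustration

n = len(data)
mean = sum(data) / n                             # x-bar

# Step 1: difference between each value and the mean, squared.
squared_diffs = [(x - mean) ** 2 for x in data]

# Step 2: add them up (the sigma) and divide by (n - 1) to get the variance.
variance = sum(squared_diffs) / (n - 1)

# Step 3: the square root of the variance is the standard deviation.
sd = math.sqrt(variance)

print("mean:", mean)
print("variance:", variance)
print("standard deviation:", round(sd, 4))
print("matches statistics.stdev:", math.isclose(sd, stdev(data)))
```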

Well, that's a lot to digest, so I'll continue with the properties of the Normal model in my next post.

Labels: bell curve, mean, median, model, Normal distribution, Normal model, points of inflection, standard deviation, variance

Saturday, August 20, 2011

Analyzing Quantitative Distributions

This post deals only with quantitative data.

When you have a quantitative dataset, it is always a good idea to look at a graphical display of it: usually a histogram or boxplot, although there are others. What you are looking to describe is the shape of the data (the subject of a recent post), the approximate center of the dataset, the spread of the values, and any unusual features (such as extremely low or high values -- outliers). We'll take them one at a time.

Shape

The shape of the dataset helps us determine how to report on the other features. We went into detail about shape in a very recent post ("The Shape of a Quantitative Distribution"). If the display is basically symmetric, you will use the mean to describe the center and a measure called the Standard deviation to describe the spread. If the display is non-symmetric, you will use the median to describe the center and the interquartile range to describe the spread. Here's a handy chart to summarize this.

Center

In a symmetric distribution the mean and median are at approximately the same place; however, statisticians use the mean. In a non-symmetric distribution, the median is used because by its very definition, it is not calculated using any extreme points or points that skew the calculation.

Spread

Notice that the range (which is the difference between the largest and smallest data value in the set) is not generally used to describe the spread. In a symmetric distribution we use the standard deviation, which has a complicated formula but a simple description: the Standard Deviation is the square root of the average squared difference between each value in the dataset and the mean of the dataset. You can find the standard deviation using a graphing calculator or a tool like MS-Excel. The symbol for Standard Deviation is

Standard deviations are relative. By that I mean that you can't tell just by looking at a standard deviation's value whether it's large or small -- it depends on the values in the dataset. Standard deviations are good for comparing spreads if you have two distributions. And, we'll soon find out that the standard deviation has an extremely important use in statistics. Here's an example for finding the mean and standard deviation using MS-Excel:
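For readers without Excel handy, the same idea can be sketched in Python using the standard-library `statistics` module. This is a stand-in of my own, not the author's method. Both datasets below are invented so that they share a mean but differ in spread, which is the kind of comparison the standard deviation is good for.

```python
from statistics import mean, stdev

# Two invented datasets with the same center but different spreads.
tight = [48, 49, 50, 51, 52]
wide = [30, 40, 50, 60, 70]

for name, values in (("tight", tight), ("wide", wide)):
    print(f"{name}: mean = {mean(values)}, standard deviation = {stdev(values):.2f}")
```

Neither standard deviation means much on its own. Side by side, they show that the second dataset is far more spread out around the same mean.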

In a non-symmetric distribution we use the Interquartile Range (IQR) because this tells the spread of the central 50% of the data values. Like the median, the IQR isn't influenced by outliers or skewness. Here's an example of finding the median and IQR using MS-Excel:
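As a rough Python counterpart (again my own stand-in, not the post's Excel example), the sketch below finds the median and IQR of a made-up skewed dataset with the standard-library `statistics` module. Quartile conventions differ between tools, so `statistics.quantiles` with its default method may not match a calculator or spreadsheet exactly.

```python
from statistics import median, quantiles

# Made-up data, skewed by one very high value.
data = [2, 3, 3, 4, 5, 6, 7, 9, 40]

q1, q2, q3 = quantiles(data, n=4)   # quartiles, default "exclusive" method
iqr = q3 - q1

# Swap the extreme value for an ordinary one and measure again.
tamer = [2, 3, 3, 4, 5, 6, 7, 9, 10]
t1, t2, t3 = quantiles(tamer, n=4)

print("median:", median(data), "IQR:", iqr)
print("median without the extreme value:", median(tamer), "IQR:", t3 - t1)
```

With these particular values, the median and IQR are the same for both lists, which illustrates why they are preferred for skewed data.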

Unusual Features

As mentioned above, unusual features include extreme points (if any), also known as outliers. In an earlier post, we covered how to determine the boundaries for outliers. If a data value lies outside the boundaries, we call it an outlier. If a value isn't quite an outlier but close, it's worth mentioning in a description of a distribution. Just call it an "extreme point."


Labels: center, interquartile range, IQR, outliers, shape, spread, standard deviation
